Database

As a result of the scraping part of the project, an input stream of pages, documents, images, etc. is built. This data must be stored in a database, which is properly designed and maintained for fast accessibility and futher analysis. We think this phase, in addition to the scraping of the data, are crucial steps in the success of the tool and need to be well designed and studied. In this section we will discuss a few metrics when deciding on the technology of choice and will leave the details of the data structure to the implementation phase.

SQL vs NoSQL

For a few reasons we think a NoSQL database is a better option for what a scraping engine is trying to achieve. Here are 5 key factors in our decision:

- A SQL database is best suited for a structured tabular data, such as accounting table or school grades. However if you are dealing with a more complex data structure with multi-level nesting and hierarchy, a NoSQL is usually a better option. The nature of data is the most important factor to consider in the decision.

- In a SQL database, you need to have all the information about the final data model, data types, relations and schema in advance. This information must be correct as if it is not any modifications to the data model would slow down or even stop the database from functioning. For example, after designing the project database in your SQL table and propagating 30M fields of data, one might decide to add Facebook shares field to their data. Modifying the schema of SQL table and adding Facebook shares to the corresponding database table will considerably decrease the speed for a period of time. This is not the case for a NoSQL database where infromation from Kickstarter campaign can be different than Indiegogo campain without following the same structure or format and stored in a single table in database.

- NoSQL databases are usually easy to scale horizontally. Horizontal scalability means you can add new servers and data storages to your distributed system. That’s where in a SQL database, a system’s capacity is only enhanced vertically when you add to your CPU cores, your RAM or Hard Disk.

- When it comes to big data processing and representations, NoSQL works much faster than a SQL database. Since many scraping projects need to deal with huge data and process or represent data in real-time, NoSQL is a better option.

- As a startup we think the scraping technology might need to start collecting data and change the model to react to the market requirements quickly when needed. For the reasons mentioned above in bullet point one, a NoSQL database where you do not need to close your data model design first thing in the development process works much better.

Managed vs Unmanaged

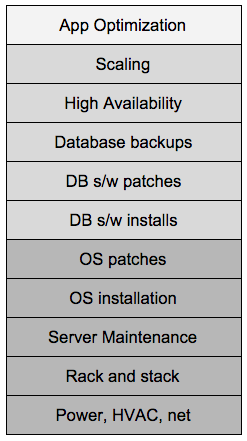

There are two styles of databases; managed versus unmanaged. It is a control-quality trade off. The unmanaged solution has your company managing the database, controlling a full stack of functionalities, as see in the image on the right.

A managed solution takes care of all layers and leaves the App Optimization to be taken care of by your company. The cost difference is not significant when all the maintenance and setup costs of unmanaged servers are included. For that reason we think a fully managed solution is probably a better fit for what the scraping engine needs at this stage.

In the case your company decides to move away from a fully managed solution there are always easy solutions to export all the information and developed software to be deployed on an internal platform.

Our Choice

Among NoSQL databases, DynamoDB, a database service provided by Amazon is our recommendation for the following reasons:

- DynamoDB supports both document and key-value data structures. This flexibility empowers the architecture design to comply with the nature of data at different situations and for different purposes

- DynamoDB has a pay-as-you-go pricing structure. This is a good fit for this project as it is a research and development project for your compnay, in the beginning, so the costs are low. As the platform grows and your company can secure financial resources, scaling the database can be done easily.

- DynamoDB can connect to Lambda for processing of the gathered information. Lambda provides sets of functionalities that can be triggered from different Amazon services. For example, updating number of facebook shares of project in 2014 archive can trigger a Lambda function which automatically updates the aggregated data of shares (i.e. sum of social network shares) of 2014, or trigger a push notification to followers of that project. Lamba can automate many tasks and reduce processing load of web portal or big data analysis engine.

- DynamoDB is readily available and scalable. The data storage can be easily expanded when required, and a few replications of the data provides accessibility to the information. You have the choice to request a very consistent copy of the data which hits the master copy to retrieve the data, or you can request the fastest copy from one of the replicas.

- With fine grained access control to the information, your engine can define different access control levels for different users in the organization with DynamoDB’s security features.

- DynamoDB only requires that a table has a primary key, but does not require you to define all of the attribute names and data types in advance.