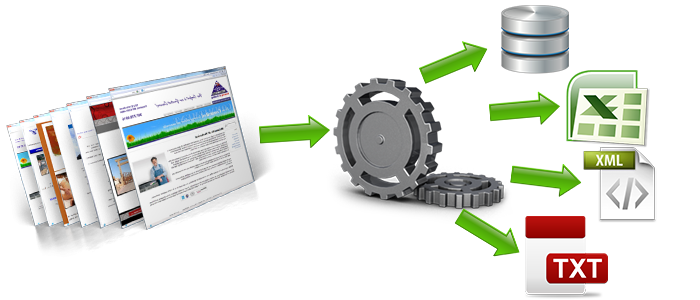

The data for any data-centric application can come from scraping different websites. While many third-party companies offer web scraping systems, some companies prefer to build an in-house package because of the sensitivity of the information and the presence of potential competitors, and because it lets them own the intellectual property of their scraping engine. Although an in-house solution increases the initial development cost of the web scraper, it yields more control over the collected data and allows further customization for each crowdfunding platform.

*Picture taken from http://webdata-scraping.com

In the world of web scraping, there are a few open source HTML parser and crawler frameworks, such as Jsoup, BeautifulSoup, Scrapy, and HTML Agility Pack, that are widely used by the community. We at Evenset recommend using a framework instead of writing scripts from scratch. We considered the following selection criteria to choose a scraping framework:

- Active community and continuous development and support

- Extensibility of framework

- Contributions from other companies to the source code that improve the library and add more features to it

- Having crawling features and ability to create spiders

- Having asynchronous features to send multiple requests to different URLs to reduce waiting time

- High speed in parsing HTML DOM

- Throttling features to avoid getting blocked by the crowdfunding platforms

Taking into account the above criteria, we recommend using Scrapy. Scrapy is built with Python, a language with one of the pioneering communities in data parsing and natural language processing. Scrapy is often among the 10 most contributed-to Python projects on GitHub and has a very active community. Many well-known web scraping companies use Scrapy as their core engine. The framework is very comprehensive and our experience working with it has been very positive.

During the implementation of a scraping engine, the software development team should follow the best practices recommended by Evenset. These best practices are established to improve the extensibility and readability of the application. This ensures easier collaboration and faster adoption of the technology by system administrators and future members of the software development team. The best practices recommended by Evenset are:

- Write modular code and avoid very long scripts that perform a single task. A modular implementation, with a separate settings and configuration module, helps non-technical members of the team modify or refine a few parameters of the crawler's behavior so that it keeps up with changes in the crowdfunding platforms. For example, if a crowdfunding platform were to change its resource path (e.g. from domain.org/projects/* to domain.com/pledges/*), the system admin can edit a configuration file to reflect the change rapidly.

- Keep an extensive log of the whole data flow, covering the cases where a crawler fails, the data cannot be processed, or the database is out of reach. This will help developers and system admins detect and rectify any issues. If required, this logging mechanism can be extended to dispatch notifications or visualisations.

- As with any other source code, proper documentation and comments should be kept throughout the code, in addition to unit tests that back each individual piece of functionality. This will be helpful if the client is going to take over the development in the future.
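The first two practices can be sketched together. The example below is a minimal illustration only: `PlatformConfig`, `PLATFORMS`, and `should_crawl` are hypothetical names, and the URL pattern is a placeholder based on the domain.com example above. The idea is that the path pattern lives in a settings structure, separate from the crawler logic, and that every rejected URL or missing configuration leaves a log entry.

```python
import logging
import re
from dataclasses import dataclass

logger = logging.getLogger("crawler")


@dataclass(frozen=True)
class PlatformConfig:
    """Settings a system admin can edit without touching crawler logic."""
    name: str
    project_url_pattern: str  # regular expression for pages worth crawling


# Hypothetical settings; in practice these could live in a separate file.
PLATFORMS = {
    "example": PlatformConfig(
        name="example",
        project_url_pattern=r"^https://domain\.com/pledges/.+",
    ),
}


def should_crawl(platform: str, url: str) -> bool:
    """Return True if the URL matches the configured pattern for the platform."""
    config = PLATFORMS.get(platform)
    if config is None:
        # A missing configuration is logged instead of failing silently.
        logger.error("No configuration found for platform %r", platform)
        return False
    matched = re.match(config.project_url_pattern, url) is not None
    if not matched:
        logger.debug("Skipping %s: outside the configured path", url)
    return matched
```

With this layout, a change of resource path on the platform only requires editing `project_url_pattern`; the crawling code itself stays untouched.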

In addition to the above best practices, there are some challenges associated with scraping big data from non-homogeneous platforms. These challenges, along with our proposed solutions, are summarized in the following section.

Web Scraping Challenges

Performance and Speed

Since the targeted number of scraped pages will constantly grow and the engine can send asynchronous concurrent requests to multiple pages, processing all the received data can become a bottleneck in the application and reduce overall performance. Scrapy uses the lxml library, which is built on C libraries and offers very fast processing of HTML content. In addition, the crawler can be distributed across several machines to increase scraping capacity. The implementation of the latter will depend on the system’s requirements as we move forward with experimentation and usability tests.
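To illustrate why concurrent requests reduce waiting time, here is a small sketch using Python's standard `asyncio` module. It is not how Scrapy is implemented internally; `fake_fetch`, `fetch_all`, and `timed_crawl` are hypothetical helpers, and the network call is simulated with a sleep so the example runs offline.

```python
import asyncio
import time


async def fake_fetch(url: str, delay: float = 0.1) -> str:
    """Stand-in for a network request; sleeps instead of downloading."""
    await asyncio.sleep(delay)
    return f"<html>{url}</html>"


async def fetch_all(urls, max_concurrency: int = 5):
    """Fetch all URLs concurrently, with at most max_concurrency in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(url):
        async with semaphore:
            return await fake_fetch(url)

    # gather preserves the order of the input URLs in its result list.
    return await asyncio.gather(*(bounded(u) for u in urls))


def timed_crawl(urls, max_concurrency: int = 5):
    """Run the crawl and return the pages plus the elapsed wall-clock time."""
    start = time.perf_counter()
    pages = asyncio.run(fetch_all(urls, max_concurrency))
    return pages, time.perf_counter() - start
```

Ten simulated pages at 0.1 seconds each would take about one second sequentially; with five requests in flight, the waiting time drops to roughly two rounds of 0.1 seconds. The concurrency cap also keeps the load on the target platform bounded.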

Being Blacklisted

If a platform is being crawled by a powerful scraper, the host may start to throttle or block the connection to reduce the load on its resources. While respecting other platforms’ infrastructures, we recommend using a combination of the following approaches to avoid getting banned:

- Paying special attention to the rules in robots.txt on the target platform (this file states whether crawling is permitted and how often it is allowed).

- Avoid following links with ‘nofollow’ and ‘noindex’ HTML meta tags. Developers use these tags specifically for static pages and image/video files.

- Mixed crawling of multiple platforms instead of isolated crawling of each crowdfunding platform separately.

- If needed, adding an intentional delay between requests to reduce the network load on the crowdfunding platform's infrastructure.

- Spoof the User-Agent so the platform assumes the crawler is a simple browser.

- Optimizing the crawling activities, e.g. crawling only the URLs that need an update and skipping old archived URLs, or crawling only relevant pages to avoid honeypot detection (honeypots are links on the platform that are visible only to crawlers and not to humans).

- Using proxy servers and rotating IP addresses.

- Crawling only relevant web pages (e.g. domain.com/projects/* in the case of Indiegogo) to avoid honeypot detection (links on the platform that are visible only to crawlers).

- Incorporate some random clicks and navigations to mimic human behaviour.
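Two of the approaches above, honoring robots.txt and adding a delay between requests, can be sketched with Python's standard `urllib.robotparser` module. The robots.txt content, the `USER_AGENT` value, and the helper names below are hypothetical and serve only as illustration; a real crawler would download the file from the target platform.

```python
import random
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-crawler"  # hypothetical crawler name

# Hypothetical robots.txt content; a real crawler would download it.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Crawl-delay: 2
"""


def build_robot_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a parser that can answer can_fetch()."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def polite_delay(parser: RobotFileParser, user_agent: str,
                 default: float = 1.0, jitter: float = 0.5) -> float:
    """Seconds to wait before the next request.

    Uses the Crawl-delay from robots.txt when present, otherwise the
    default, and adds random jitter so requests are not evenly spaced.
    """
    base = parser.crawl_delay(user_agent) or default
    return base + random.uniform(0, jitter)
```

A crawler loop would call `parser.can_fetch(USER_AGENT, url)` before each request and sleep for `polite_delay(...)` seconds between requests. Scrapy exposes comparable behavior through settings such as `ROBOTSTXT_OBEY` and `DOWNLOAD_DELAY`.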

Data Validation

While scraping helps automate data collection from several platforms, slight changes in the structure of a platform might lead to inconsistent data. For example, one might look for the latest USD to CAD exchange rates on different banking platforms. Since the exchange rate is essentially a number, the scraper is designed to look for a field in the page that holds that number. Now assume that the webmaster of one of these banking platforms adds some characters such as “USD/CAD” to the exchange rate field. Since the crawler only scrapes the HTML content, the whole “USD/CAD” string gets scraped and, if not validated, stored in the database. The best practice is to validate the data right before sending it to the pipeline that ends with database storage. If a certain field must consist of exactly 6 alphanumeric characters, the validation and cleaning functions should be applied before the data is sent to the database. This stream validation avoids an unnecessary scan of the whole database, as block or whole-database validation would require, which is costly and error-prone.
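A minimal sketch of such stream validation is shown below. The function names `clean_exchange_rate` and `is_valid_code` are hypothetical, and the rules (a positive decimal number, exactly 6 alphanumeric characters) are taken from the examples in the paragraph above rather than from any specific platform.

```python
import re
from decimal import Decimal

NUMBER_RE = re.compile(r"\d+(?:\.\d+)?")
CODE_RE = re.compile(r"[A-Za-z0-9]{6}")


def clean_exchange_rate(raw: str) -> Decimal:
    """Extract a positive decimal rate from a scraped field.

    Raises ValueError when the field holds no usable number, so the
    item can be rejected before it reaches the database pipeline.
    """
    match = NUMBER_RE.search(raw)
    if match is None:
        raise ValueError(f"no numeric rate in {raw!r}")
    rate = Decimal(match.group())
    if rate <= 0:
        raise ValueError(f"rate must be positive, got {rate}")
    return rate


def is_valid_code(raw: str) -> bool:
    """True if the field is exactly 6 alphanumeric characters."""
    return CODE_RE.fullmatch(raw.strip()) is not None
```

For instance, `clean_exchange_rate("USD/CAD 1.3521")` strips the added label and keeps the number, while a field containing only `"USD/CAD"` raises an error instead of being stored.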

The second layer of validation is applied when sending the data to the end users in the web app portal, to cover any errors missed by the scraper's validators and cleaners. This ensures that the users will not see any strange discrepancies on their portal.

Scraping JavaScript-Generated Content

Many modern platforms use JavaScript (or a JavaScript framework, e.g. jQuery or AngularJS) to fetch data from the remote server. The process starts with the server sending common files such as the basic HTML skeleton, CSS and images to the browser to form the basic structure of the page; the JavaScript framework then sends another request to the server and retrieves the remaining data to fill into the page. This makes each page load faster, and the browser does not refresh when the user clicks on different links in the page (the same behaviour you see when you click to view the details of a received email in Gmail). However, crawlers and even search engine robots usually do not load JavaScript, hence they cannot get the proper data from the server and the scraping becomes unsuccessful. There are two approaches to tackle this problem:

- Simulating the behaviour of a browser by using a headless browser or a browser automation tool, e.g. PhantomJS or Selenium, loading each page in the browser and passing the rendered data to the scraper. This way the whole page loads as if a normal user, and not a crawler, has visited it.

- Digging into the structure of the online platform, extracting its JavaScript APIs, and crawling only those to get well-organized, structured data. For example, the following link should show the latest 144 projects on Indiegogo (Link , link active on May 1st, 2016). As you can see, all the valuable information, including the URL to fetch the campaign image, is included in an array of JavaScript objects. This makes the scraping easier and requires minimal resources to validate the data.
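The second approach can be sketched as follows. The payload and its field names (`campaigns`, `title`, `url`, `image_url`, `raised`) are assumptions made up for illustration and do not describe Indiegogo's actual API; the point is only that a JSON response can be parsed directly, without any HTML parsing.

```python
import json

# Hypothetical payload; the field names are assumptions for illustration.
SAMPLE_RESPONSE = """
{"campaigns": [
  {"title": "Solar Lamp", "url": "/projects/solar-lamp",
   "image_url": "https://domain.com/img/1.png", "raised": 1200},
  {"title": "Board Game", "url": "/projects/board-game",
   "image_url": "https://domain.com/img/2.png", "raised": 560},
  {"title": "Incomplete entry"}
]}
"""

REQUIRED_FIELDS = ("title", "url", "image_url", "raised")


def parse_campaigns(body: str) -> list:
    """Return the campaigns that carry every required field."""
    payload = json.loads(body)
    campaigns = []
    for item in payload.get("campaigns", []):
        # Entries missing a required field are skipped rather than stored.
        if all(field in item for field in REQUIRED_FIELDS):
            campaigns.append({field: item[field] for field in REQUIRED_FIELDS})
    return campaigns
```

Because the data already arrives as structured objects, validation reduces to checking that the expected fields are present and well-typed.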